Here’s How DeepSeek Censorship Actually Works, and Easy Ways to Get Rou…


Start your journey with DeepSeek today and experience the future of intelligent technology. With employees also calling DeepSeek's models 'wonderful,' the US software vendor weighed the potential risks of hosting AI technology developed in China before ultimately deciding to offer it to customers, said Christian Kleinerman, Snowflake's executive vice president of product. Businesses can integrate the model into their workflows for various tasks, ranging from automated customer support and content generation to software development and data analysis. Available now on Hugging Face, the model offers users seamless access via web and API, and it appears to be the most advanced large language model (LLM) currently available in the open-source landscape, according to observations and tests from third-party researchers. R1's success highlights a sea change in AI that could empower smaller labs and researchers to create competitive models and diversify the options. The last five bolded models were all announced in about a 24-hour period just before the Easter weekend. "Despite their apparent simplicity, these problems often involve complex solution techniques, making them excellent candidates for constructing proof data to improve theorem-proving capabilities in Large Language Models (LLMs)," the researchers write. The move signals DeepSeek-AI’s commitment to democratizing access to advanced AI capabilities.

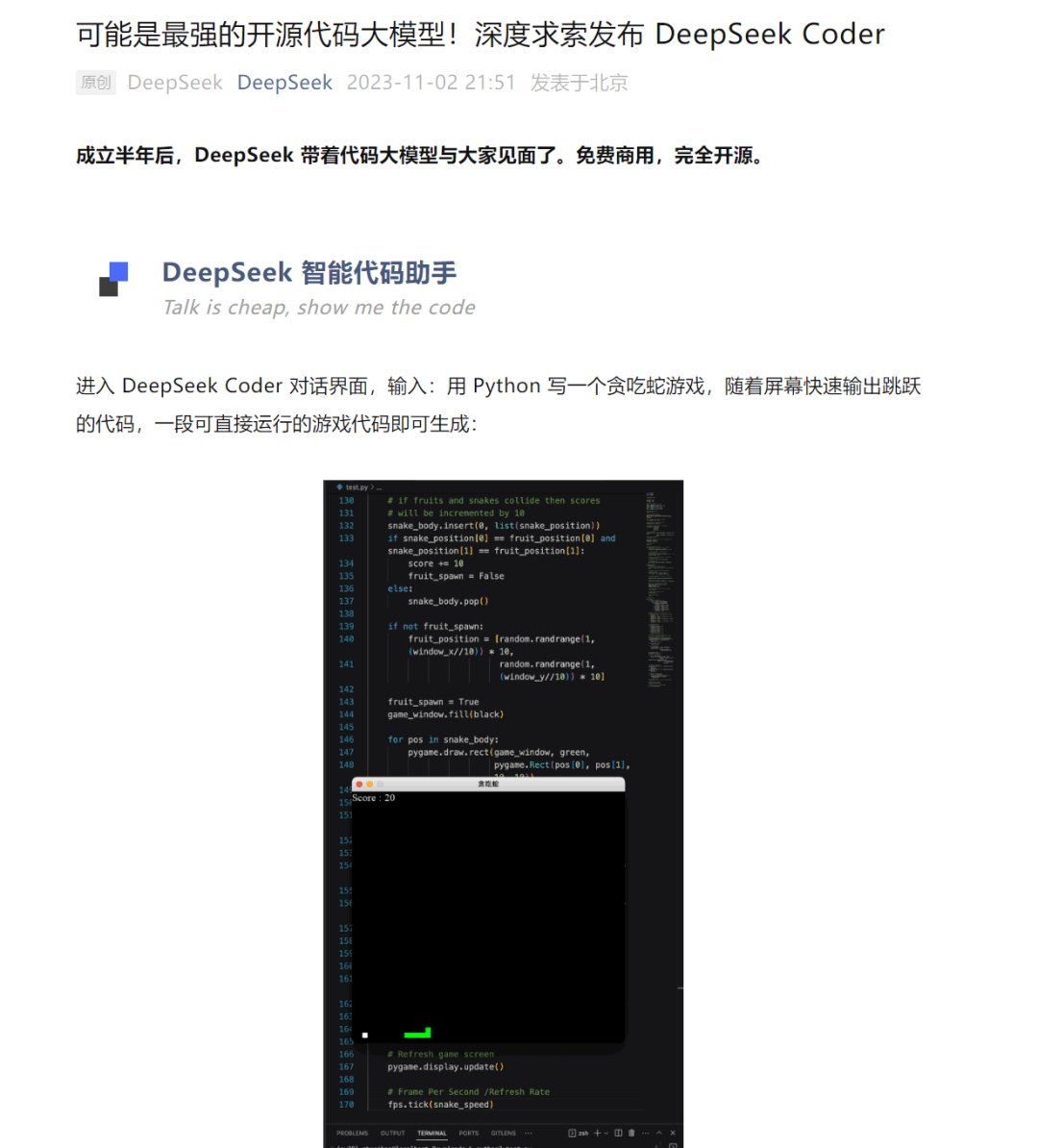

For additional security, limit use to devices whose ability to send data to the public internet is restricted. "Through several iterations, the model trained on large-scale synthetic data becomes significantly more powerful than the initially under-trained LLMs, leading to higher-quality theorem-proof pairs," the researchers write. DeepSeek Coder provides the ability to submit existing code with a placeholder, so that the model can complete it in context. We will consistently study and refine our model architectures, aiming to further improve both training and inference efficiency, striving to approach efficient support for infinite context length. A common use case in developer tools is to autocomplete based on context. A typical use case is to complete the code for the user after they provide a descriptive comment. Absolutely outrageous, and an incredible case study by the research team. The praise for DeepSeek-V2.5 follows a still ongoing controversy around HyperWrite’s Reflection 70B, which co-founder and CEO Matt Shumer claimed on September 5 was "the world’s top open-source AI model," according to his internal benchmarks, only to see those claims challenged by independent researchers and the wider AI research community, who have so far failed to reproduce the stated results. The model’s open-source nature also opens doors for further research and development.
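To make the placeholder idea concrete, here is a minimal sketch of how a fill-in-the-middle prompt could be assembled. The function name `build_fim_prompt`, the `<FILL_ME>` marker, and the three sentinel strings are assumptions for illustration; the exact sentinel tokens depend on the model, so check the tokenizer configuration of the checkpoint you use.

```python
# Sentinel strings are an assumption; confirm them against the tokenizer
# of the specific DeepSeek Coder checkpoint before relying on them.
FIM_BEGIN = "<｜fim▁begin｜>"
FIM_HOLE = "<｜fim▁hole｜>"
FIM_END = "<｜fim▁end｜>"


def build_fim_prompt(code_with_placeholder: str, placeholder: str = "<FILL_ME>") -> str:
    """Turn code containing exactly one placeholder into a fill-in-the-middle prompt.

    The text before the placeholder becomes the prefix, the text after it
    becomes the suffix, and the model is asked to generate what goes between.
    """
    if code_with_placeholder.count(placeholder) != 1:
        raise ValueError("expected exactly one placeholder in the code")
    prefix, suffix = code_with_placeholder.split(placeholder)
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"


# Hypothetical snippet with a gap where the model should complete the code.
snippet = "def add(a, b):\n    <FILL_ME>\n\nprint(add(1, 2))\n"
prompt = build_fim_prompt(snippet)
```

The resulting `prompt` string would then be passed to the model as ordinary input text; the completion it returns is the code intended for the gap.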

Then, in tandem with AI chip concerns, development cost is another cause of the disruption. Reporting by The New York Times provides further evidence about the rise of large-scale AI chip smuggling after the October 2023 export control update. It also provides a reproducible recipe for creating training pipelines that bootstrap themselves by starting with a small seed of samples and generating higher-quality training examples as the models become more capable. They implemented an FP8 mixed precision training framework, which reduces memory usage and accelerates training compared to higher-precision formats. DeepSeek-V2.5’s architecture includes key innovations, such as Multi-Head Latent Attention (MLA), which significantly reduces the KV cache, thereby improving inference speed without compromising model performance. Run the Model: Use Ollama’s interface to load and interact with the DeepSeek-R1 model. Learn how to install DeepSeek-R1 locally for coding and logical problem-solving, with no monthly fees and no data leaks.
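As a rough illustration of interacting with a locally running model, the sketch below sends a prompt to Ollama's local HTTP endpoint using only the Python standard library. It assumes Ollama is installed and serving on its default port, and that a model has already been pulled under the tag `deepseek-r1`; the helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1", timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, calling `ask("What is the Great Firewall of China?")` would return the model's answer as a string. Because the request goes to `localhost`, the prompt itself is not sent to a hosted service.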

Here’s a side-by-side comparison of how DeepSeek-R1 answers the same question: "What’s the Great Firewall of China?" It was also just a little bit emotional to be in the same kind of ‘hospital’ as the one that gave birth to Leta AI and GPT-3 (V100s), ChatGPT, GPT-4, DALL-E, and much more. I like to stay on the ‘bleeding edge’ of AI, but this one came faster than even I was prepared for. By making DeepSeek-V2.5 open-source, DeepSeek-AI continues to advance the accessibility and potential of AI, cementing its role as a leader in the field of large-scale models. AI engineers and data scientists can build on DeepSeek-V2.5, creating specialized models for niche applications, or further optimizing its performance in specific domains. It can actually get rid of the pop-ups. We can convert the data that we have into different formats in order to extract the most from it. However, both tools have their own strengths.
