DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models In Cod…

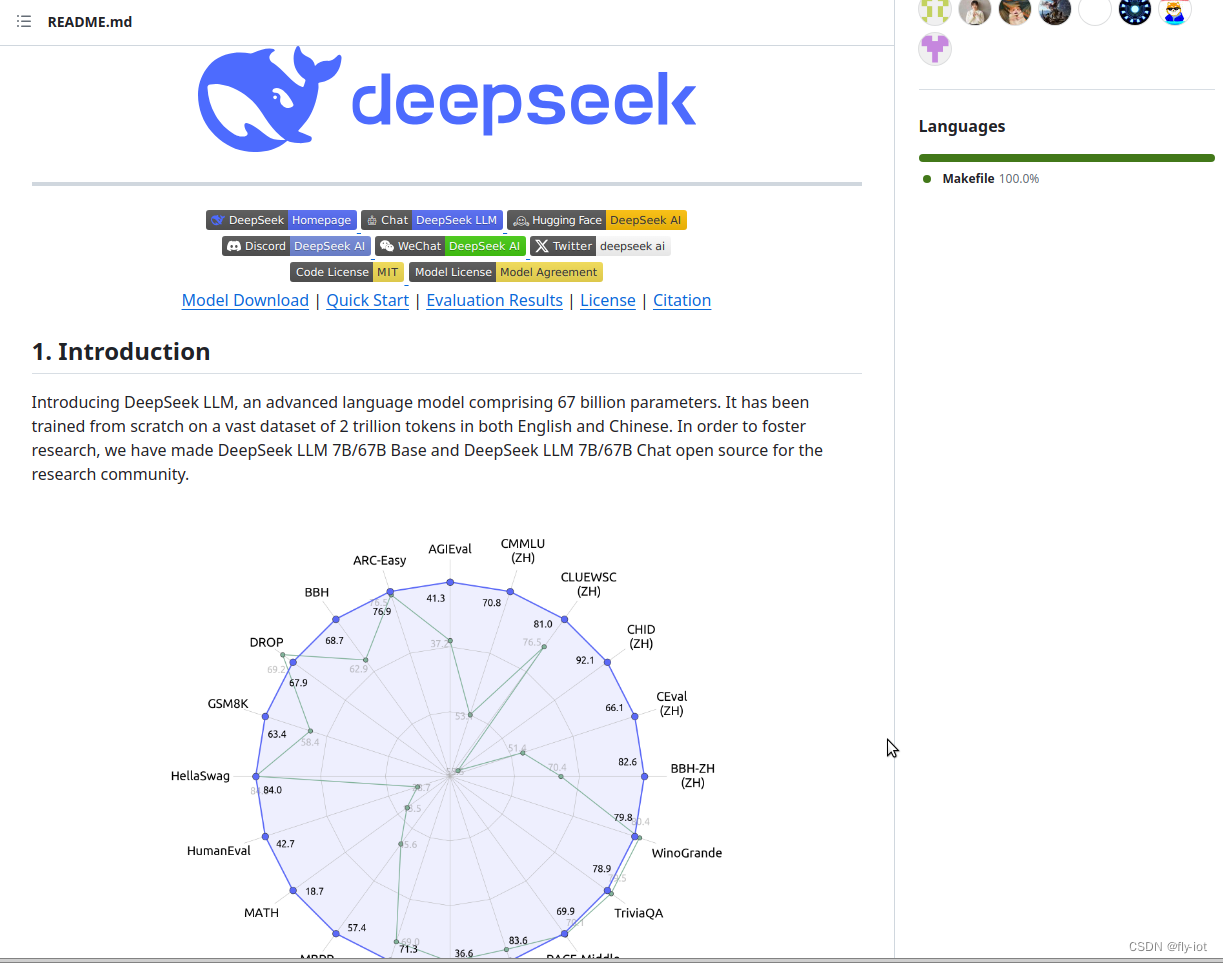

Despite the controversies, DeepSeek has stayed dedicated to its open-source philosophy and shown that groundbreaking technology does not always require large budgets. While its breakthroughs are no doubt impressive, the recent cyberattack raises questions about the security of emerging technology. As technology continues to evolve at a rapid pace, so does the potential for tools like DeepSeek to shape the future landscape of information discovery and search technologies. They then used that model to create a bunch of training data to train smaller models (the Llama and Qwen distillations). In contrast, however, it has been consistently shown that large models are better when you're actually training them in the first place; that was the whole idea behind the explosion of GPT and OpenAI. As for Chinese benchmarks, except for CMMLU, a Chinese multi-subject multiple-choice task, DeepSeek-V3-Base also shows better performance than Qwen2.5 72B. Compared with LLaMA-3.1 405B Base, the largest open-source model with 11 times the activated parameters, DeepSeek-V3-Base also exhibits much better performance on multilingual, code, and math benchmarks. They then gave the model a bunch of logical questions, like math questions. They used this data to train DeepSeek-V3-Base on a set of high quality thoughts, then passed the model through another round of reinforcement learning, which was similar to the one that created DeepSeek-R1-Zero, but with more data (we'll get into the specifics of the entire training pipeline later).

We won't be covering DeepSeek-V3-Base in depth in this article, it's worth a discussion in itself, but for now we can think of DeepSeek-V3-Base as a big transformer (671 billion trainable parameters) that was trained on high quality text data in the typical fashion. The Pulse is a series covering insights, patterns, and trends within Big Tech and startups. To catch up on China and robotics, check out our two-part series introducing the industry. With a strong focus on innovation, efficiency, and open-source development, it continues to lead the AI industry. Reinforcement learning, in its most basic sense, assumes that if you got a good result, the entire sequence of events that led to that result was good. If you got a bad result, the entire sequence is bad. Because AI models output probabilities, when the model creates a good result, we try to make all of the predictions which created that result more confident.
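
The "good result, reinforce everything that led to it" idea can be illustrated with a small sketch. This is not DeepSeek's training code; it is a toy REINFORCE-style update, assuming a hypothetical policy that is just three logits with no input state. The names `softmax`, `reinforce_update`, `sequence`, and the learning rate are illustrative assumptions.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_update(logits, chosen_actions, reward, lr=0.1):
    """Return new logits after applying the same reward to every chosen action.

    A positive reward makes each action in the sequence more likely;
    a negative reward makes each one less likely.
    """
    new_logits = list(logits)
    for action in chosen_actions:
        probs = softmax(new_logits)
        for i in range(len(new_logits)):
            # Gradient of log p(action) with respect to logit i.
            grad = (1.0 if i == action else 0.0) - probs[i]
            new_logits[i] += lr * reward * grad
    return new_logits

# Toy setup (assumption): three possible outputs, all equally likely at first.
logits = [0.0, 0.0, 0.0]
sequence = [2, 2, 1]  # the outputs the model happened to produce

after_good = reinforce_update(logits, sequence, reward=+1.0)
after_bad = reinforce_update(logits, sequence, reward=-1.0)

print("after good result:", [round(p, 3) for p in softmax(after_good)])
print("after bad result: ", [round(p, 3) for p in softmax(after_bad)])
```

Note that the whole sequence shares one reward: the update cannot tell which individual prediction was responsible for the outcome, which is exactly the simplifying assumption described above.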

When the model creates a bad result, we can make those outputs less confident. As previously discussed in the foundations, the main way you train a model is by giving it some input, getting it to predict some output, then adjusting the parameters in the model to make that output more likely. I'm planning on doing a comprehensive article on reinforcement learning which will go through more of the nomenclature and concepts. The biggest jump in performance, the most novel ideas in DeepSeek, and the most complex concepts in the DeepSeek paper all revolve around reinforcement learning. Deviation From Goodness: If you train a model using reinforcement learning, it might learn to double down on strange and potentially problematic output. Synthesize 200K non-reasoning data (writing, factual QA, self-cognition, translation) using DeepSeek-V3. Similarly, DeepSeek-V3 showcases exceptional performance on AlpacaEval 2.0, outperforming both closed-source and open-source models. Let's get an idea of what each of these models is about. This is where things get interesting. It would switch languages randomly, it would create output incomprehensible to humans, and it would often endlessly repeat things. Instead of stuffing everything in randomly, you pack small groups neatly to fit better and find things easily later.

This especially confuses people, because they rightly wonder how you can use the same data in training again and make it better. Basically, because reinforcement learning learns to double down on certain kinds of thought, the initial model you use can have a tremendous impact on how that reinforcement goes. In recent weeks, many people have asked for my thoughts on the DeepSeek V3 and R1 models. This is often seen as a problem, but DeepSeek-R1 used it to its advantage. China's dominance in solar PV, batteries and EV production, however, has shifted the narrative to the indigenous innovation perspective, with local R&D and homegrown technological advancements now seen as the primary drivers of Chinese competitiveness. However, there are a few potential limitations and areas for further research that could be considered. However, it is worth noting that this likely includes additional expenses beyond training, such as research, data acquisition, and salaries. Models trained on a lot of data with a lot of parameters are, in general, better. So, you take some data from the internet, split it in half, feed the beginning to the model, and have the model generate a prediction. Well, the idea of reinforcement learning is fairly simple, but there are a bunch of gotchas in the approach which need to be accommodated.
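
The "split it in half" training setup mentioned above can be sketched in a few lines. This is a simplified illustration only: it splits on whitespace rather than using a real tokenizer, and `split_in_half` and `token_accuracy` are hypothetical helper names, not part of any DeepSeek tooling.

```python
def split_in_half(text):
    """Split text into a prompt (first half) and a held-out target (second half)."""
    words = text.split()
    mid = len(words) // 2
    return words[:mid], words[mid:]

def token_accuracy(predicted, target):
    """Fraction of target positions where the prediction matches exactly."""
    if not target:
        return 0.0
    matches = sum(p == t for p, t in zip(predicted, target))
    return matches / len(target)

text = "the quick brown fox jumps over the lazy dog today"
prompt, target = split_in_half(text)

# Stand-in for a model's output (assumption): a guess at the second half.
prediction = ["over", "a", "lazy", "cat", "today"]

print("prompt:    ", prompt)
print("target:    ", target)
print("accuracy:  ", token_accuracy(prediction, target))
```

In real training the comparison is not an exact-match score but a loss over the model's predicted probabilities, and the parameters are adjusted so the held-out half becomes more likely.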
