Fighting for DeepSeek: The Samurai Way

DeepSeek maps, monitors, and gathers data across open, deep web, and darknet sources to provide strategic insights and data-driven analysis on critical topics. DeepSeek helps organizations reduce these risks through extensive data analysis of deep web, darknet, and open sources, exposing indicators of legal or ethical misconduct by entities or key figures associated with them. Along with opportunities, this connectivity also presents challenges for businesses and organizations, which must proactively protect their digital assets and respond to incidents of IP theft or piracy. Armed with actionable intelligence, individuals and organizations can proactively seize opportunities, make stronger decisions, and strategize to meet a range of challenges. Organizations and businesses worldwide must be ready to respond swiftly to shifting economic, political, and social trends in order to mitigate potential threats and losses to personnel, assets, and organizational performance. If you're a new user, create an account using your email or social login options.

As part of a larger effort to improve the quality of autocomplete, we've seen DeepSeek-V2 contribute to both a 58% increase in the number of accepted characters per user and a reduction in latency for both single-line (76 ms) and multi-line (250 ms) suggestions.

Right now, a Transformer spends the same amount of compute per token regardless of which token it's processing or predicting. This seems intuitively inefficient: the model should think more if it's making a harder prediction and less if it's making an easier one.

DeepSeek v3 only uses multi-token prediction up to the second next token, and the acceptance rate the technical report quotes for second-token prediction is between 85% and 90%. This is quite impressive and should enable nearly double the inference speed (in units of tokens per second per user) at a fixed price per token if we use the aforementioned speculative decoding setup. If, for example, each subsequent token gives us a 15% relative reduction in acceptance, it might be possible to squeeze out some more gain from this speculative decoding setup by predicting a few more tokens out.
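The arithmetic behind "nearly double" can be sketched as follows. This is a rough model rather than anything taken from the technical report: the function name `expected_tokens_per_pass` and its parameters are hypothetical, and it assumes that each drafted token is only useful if every drafted token before it was accepted, that acceptance events are independent, and that the extra cost of drafting is negligible.

```python
def expected_tokens_per_pass(first_accept: float,
                             relative_drop: float,
                             extra_tokens: int) -> float:
    """Expected number of tokens produced per forward pass.

    first_accept:  acceptance rate of the first drafted (second-next) token
    relative_drop: assumed relative reduction in acceptance for each
                   further drafted token (e.g. 0.15 for 15%)
    extra_tokens:  how many tokens are drafted beyond the guaranteed one
    """
    expected = 1.0      # the ordinary next token is always produced
    prefix_prob = 1.0   # probability that all drafts so far were accepted
    accept = first_accept
    for _ in range(extra_tokens):
        prefix_prob *= accept            # this draft counts only if the prefix held
        expected += prefix_prob
        accept *= (1.0 - relative_drop)  # later drafts are assumed harder to accept
    return expected


if __name__ == "__main__":
    for k in range(0, 5):
        print(k, expected_tokens_per_pass(0.85, 0.15, k))
```

With one drafted token and an 85% acceptance rate, this gives 1.85 tokens per pass, which is where the "nearly double" figure comes from. Under the assumed 15% relative drop, each additional drafted token adds less than the one before it, so the gain from predicting further out shrinks quickly.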

The final change that DeepSeek v3 makes to the vanilla Transformer is the ability to predict multiple tokens out for each forward pass of the model. The basic idea is the following: we first do an ordinary forward pass for next-token prediction. We can iterate this as much as we like, though DeepSeek v3 only predicts two tokens out during training. This allows them to use a multi-token prediction objective during training instead of strict next-token prediction, and they demonstrate a performance improvement from this change in ablation experiments. The acceptance rate doesn't look worse than the acceptance probabilities one would get when decoding Llama 3 405B with Llama 3 70B, and might even be better.

Figure 3: An illustration of DeepSeek v3's multi-token prediction setup taken from its technical report.

I think it's likely even this distribution is not optimal and a better choice of distribution will yield better MoE models, but it's already a significant improvement over simply forcing a uniform distribution. But is the basic assumption here even true?

Multi-head latent attention: According to the team, MLA is equipped with low-rank key-value joint compression, which requires a much smaller amount of key-value (KV) cache during inference, thus reducing memory overhead to between 5 and 13 percent of that of conventional methods, and offers better performance than MHA.
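To give a feel for why low-rank key-value compression shrinks the KV cache, here is a back-of-the-envelope sketch. It is not DeepSeek's implementation: the function `kv_cache_ratio` and all dimensions are hypothetical, and it ignores details such as any extra positional-encoding keys that a real model would also cache. It only compares caching full keys and values for every head against caching a single compressed latent vector per token.

```python
def kv_cache_ratio(n_heads: int, head_dim: int, latent_dim: int) -> float:
    """Per-token cache size with a compressed latent, relative to standard MHA.

    Standard MHA caches one key and one value vector per head, per token.
    The compressed variant (as assumed here) caches a single latent vector
    of size latent_dim from which keys and values are reconstructed.
    """
    full_cache = 2 * n_heads * head_dim   # keys + values for all heads
    compressed_cache = latent_dim         # one shared latent per token
    return compressed_cache / full_cache


if __name__ == "__main__":
    # Hypothetical dimensions chosen only for illustration.
    ratio = kv_cache_ratio(n_heads=32, head_dim=128, latent_dim=512)
    print(f"compressed cache is {ratio:.2%} of the full KV cache")
```

With these illustrative numbers the compressed cache is a little over 6% of the full one, which falls inside the 5 to 13 percent range quoted above, though the actual figure depends on the real model dimensions.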

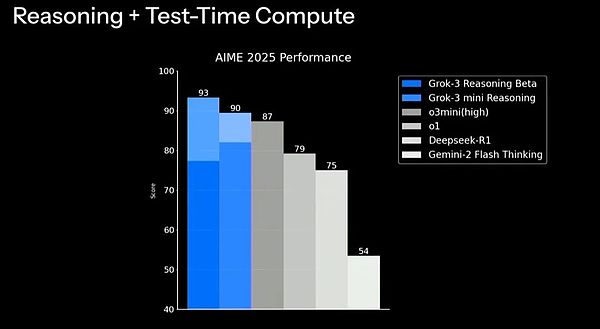

We can generate a few tokens in each forward pass and then show them to the model to decide from which point we need to reject the proposed continuation. The benchmarks are quite impressive, but in my opinion they really only show that DeepSeek-R1 is definitely a reasoning model (i.e. the extra compute it's spending at test time is actually making it smarter). 3. Synthesize 600K reasoning data from the internal model, with rejection sampling (i.e. if the generated reasoning had a wrong final answer, then it is removed). Integrate user feedback to refine the generated test data scripts. The CodeUpdateArena benchmark is designed to test how well LLMs can update their own knowledge to keep up with these real-world changes.

Virtue is a computer-based, pre-employment personality test developed by a multidisciplinary team of psychologists, vetting specialists, behavioral scientists, and recruiters to screen out candidates who exhibit red-flag behaviors indicating a tendency towards misconduct. Through extensive mapping of open, darknet, and deep web sources, DeepSeek zooms in to trace their web presence and identify behavioral red flags, reveal criminal tendencies and activities, or any other conduct not in alignment with the organization's values. Making sense of big data, the deep web, and the dark web. Making information accessible through a combination of cutting-edge technology and human capital.
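Returning to the idea of drafting a few tokens and letting the model decide where to reject the proposed continuation, the following is a minimal sketch of the verification step. It uses a simplified greedy rule (a drafted token is accepted only if it equals the model's own top choice), not the probabilistic acceptance rule used in sampling-based speculative decoding. The names `verify_draft` and `toy_next_token` are hypothetical, and a real system would check all drafted positions in one batched forward pass rather than calling the model once per token.

```python
from typing import Callable, List, Sequence


def verify_draft(context: List[int],
                 draft: Sequence[int],
                 next_token: Callable[[Sequence[int]], int]) -> List[int]:
    """Return the tokens to append after checking a drafted continuation.

    Drafted tokens are accepted one by one while they match the model's
    own greedy choice. At the first mismatch, the model's choice replaces
    the drafted token and everything after it is discarded.
    """
    accepted: List[int] = []
    for proposed in draft:
        target = next_token(context + accepted)
        if proposed != target:
            accepted.append(target)  # keep the model's token, reject the rest
            return accepted
        accepted.append(proposed)
    return accepted


def toy_next_token(tokens: Sequence[int]) -> int:
    """Stand-in for a model: always predicts (last token + 1) mod 10."""
    return (tokens[-1] + 1) % 10


if __name__ == "__main__":
    # The first two drafted tokens agree with the toy model, the third does not.
    print(verify_draft([1, 2], [3, 4, 9], toy_next_token))
```

In this toy run the first two drafted tokens are accepted and the third is replaced by the model's own prediction, so a single verification step still yields three tokens.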
